Image stacking is a technique used in astrophotography to reduce noise and recover signal. It means taking multiple shots of an object (usually long exposures) and combining them to create a cleaner image.

Let’s get back to basics: what is a digital image? Simply put, an image is a collection of pixel values organized in rows and columns. The pixel is the smallest unit of an image, and each pixel value is a single number. For an 8-bit image, pixel values range from 0 to 255. Color images contain red, green, and blue channels, but for simplicity’s sake, we’ll discuss a grayscale image here.
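To make the rows-and-columns idea concrete, here is a minimal Python sketch of a tiny grayscale image stored as a list of rows. The pixel values and the helper name `pixel_at` are invented for illustration; they are not taken from any real photo.

```python
# A tiny grayscale "image": 3 rows, 4 columns, 8-bit values (0 to 255).
# These pixel values are made up for illustration only.
image = [
    [0,   50,  100, 150],
    [25,  75,  125, 175],
    [200, 225, 250, 255],
]

def pixel_at(img, row, col):
    """Return the pixel value at a given row and column (hypothetical helper)."""
    return img[row][col]

rows = len(image)       # number of rows
cols = len(image[0])    # number of columns

print(rows, cols)             # image dimensions
print(pixel_at(image, 1, 2))  # value in the second row, third column
```

A color image would simply carry three such grids, one each for red, green, and blue.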

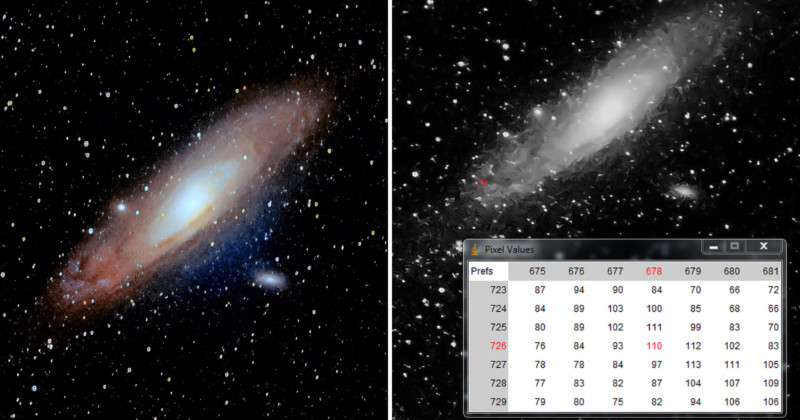

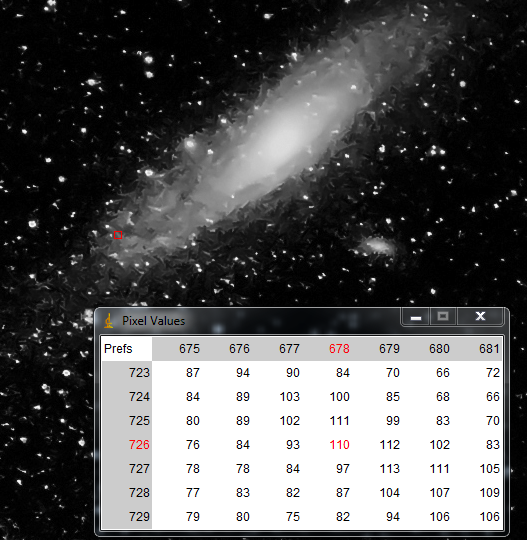

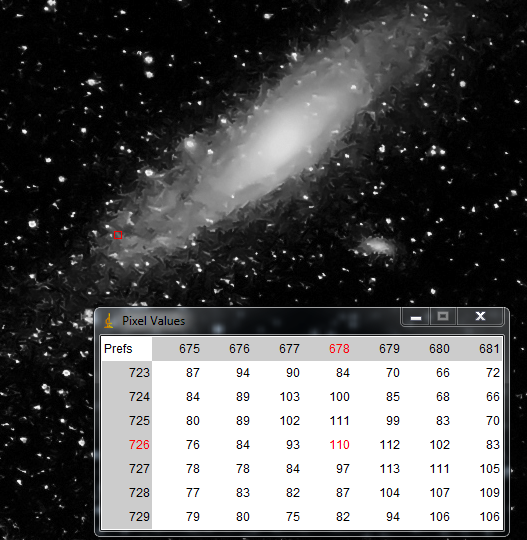

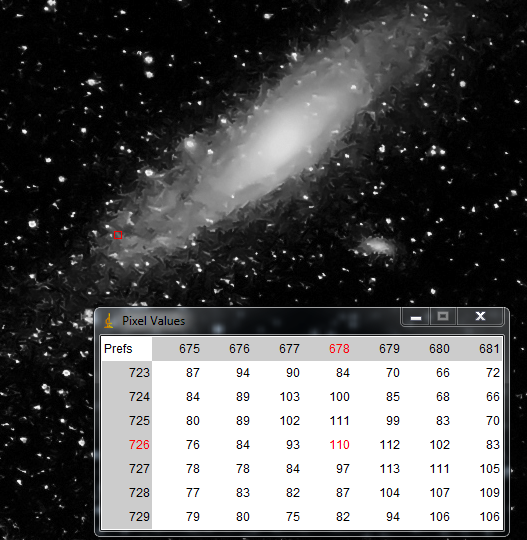

For example, here’s a shot of the Andromeda galaxy in grayscale (original image here). I’ve marked a region and displayed its pixel values below, highlighted in red:

In astrophotography we may take photos of galaxies, nebulae, and other celestial objects that are very faint. To overcome this limitation, multiple long-exposure photos are taken and stacked. A single image can have different kinds of noise (dark current noise, pattern noise, etc.), and the signal-to-noise ratio of the image can be improved by stacking. The higher the signal-to-noise ratio (SNR) of the image, the better the image quality. Simple as that.

The signal-to-noise ratio, or S/N, increases in proportion to the square root of the number of images in the stack, provided the noise is random and independent from one image to the next. For example: if we use 9 images to create a stack, the signal-to-noise ratio improves by a factor of 3; if we use 100 images, the S/N improves by a factor of 10, and so on.
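The square-root rule can be checked with a small simulation. The sketch below is an assumption-laden illustration, not a model of a real camera: it assumes a constant signal, zero-mean Gaussian noise that is independent between frames, and arbitrary values for `signal` and `noise_sigma`. The function name `simulated_snr_gain` is hypothetical.

```python
import math
import random
import statistics

def simulated_snr_gain(n_frames, n_pixels=5000, signal=100.0,
                       noise_sigma=10.0, seed=0):
    """Estimate the S/N gain from averaging n_frames noisy frames.

    Assumes zero-mean Gaussian noise, independent from frame to frame.
    """
    rng = random.Random(seed)
    # Noise level of a single frame.
    single = [signal + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]
    # Noise level after averaging n_frames frames pixel by pixel.
    stacked = [
        sum(signal + rng.gauss(0.0, noise_sigma) for _ in range(n_frames)) / n_frames
        for _ in range(n_pixels)
    ]
    # The signal is identical in both cases, so the S/N gain is the
    # ratio of the two noise levels (standard deviations).
    return statistics.pstdev(single) / statistics.pstdev(stacked)

for n in (4, 9, 100):
    # Columns: number of frames, predicted gain sqrt(n), simulated gain.
    print(n, round(math.sqrt(n), 2), round(simulated_snr_gain(n), 2))
```

The simulated gain should land close to the predicted square root, with small deviations because the noise is random.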

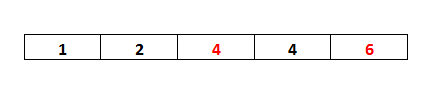

Now, let’s examine how stacking reduces noise and recovers signal. For the sake of simplicity, consider an image without noise (1 row and 5 columns) as shown below. It has only 5 pixels with values 1, 2, 3, 4, and 5:

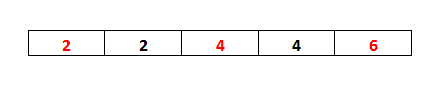

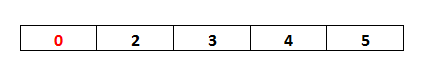

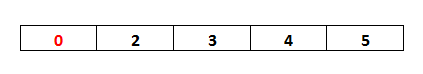

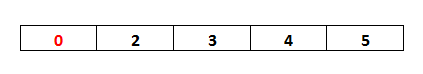

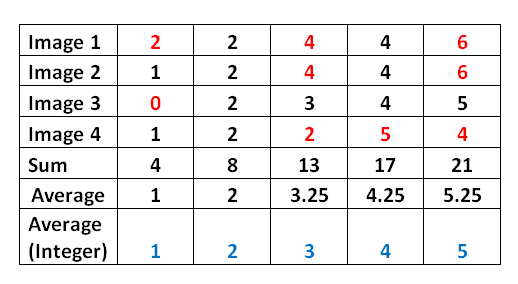

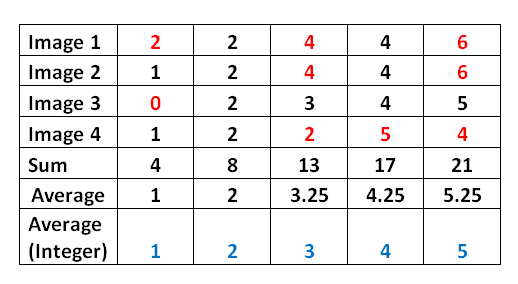

When we capture this image using a camera, we may get some noise along with the signal. The noise changes some of the pixel values (red numbers), so they no longer match the true signal. To reduce the noise, a total of 4 images are captured for stacking:

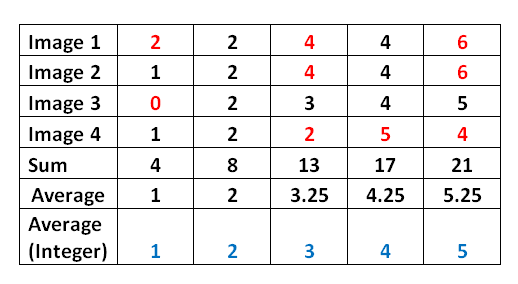

We’ve now got 4 images for processing; the next step is the stacking. For each pixel position, the values from the 4 images are averaged to get a single value, as shown below:

The averages of the pixel values are 1, 2, 3.25, 4.25, and 5.25; rounding to the nearest integer, we get 1, 2, 3, 4, 5. This is the original image: stacking reduced the noise and recovered the signal, which means the signal-to-noise ratio of the image improved. The more images we capture and stack, the closer our average pixel values will get to 1, 2, 3, 4, 5.
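The averaging step can be sketched in a few lines of Python. Note that the four noisy frames below are hypothetical: they are not the values from the figure, just one set of captures chosen so that the averages come out to 1, 2, 3.25, 4.25, and 5.25. The function name `stack_mean` is also made up for this example.

```python
# Noise-free "true" image from the example: 1 row, 5 columns.
true_image = [1, 2, 3, 4, 5]

# Four hypothetical noisy captures (NOT the values from the figure);
# chosen so the averages match 1, 2, 3.25, 4.25, 5.25.
frames = [
    [1, 2, 3, 4, 5],
    [1, 2, 4, 4, 5],
    [1, 2, 3, 5, 5],
    [1, 2, 3, 4, 6],
]

def stack_mean(frames):
    """Average the frames pixel by pixel (mean stacking)."""
    n = len(frames)
    # zip(*frames) groups the values that share the same pixel position.
    return [sum(column) / n for column in zip(*frames)]

averaged = stack_mean(frames)
recovered = [round(value) for value in averaged]

print(averaged)   # [1.0, 2.0, 3.25, 4.25, 5.25]
print(recovered)  # [1, 2, 3, 4, 5]
```

Real stacking software usually also aligns the frames before combining them and may use other combining methods; this sketch covers only the plain average described above.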

This is the simplest explanation of how image stacking works. I hope you enjoyed reading the article!

About the author: Rupesh Sreeraman is an engineer, photographer, astronomer, and the creator of ExMplayer and NeuralStyler AI. You can find more of his work on Flickr, Instagram, and GitHub. This post was also published here.